You can learn a lot about trends by watching how they evolve and distribute. I spent a few words on that in my recent blog article ‘How many tweets are necessary to create a trending topic?’ (sorry, in Dutch). In there, I use XQuery to analyze tweets about the announcement of the upcoming merge of my company with another. In this article I will show the code I used, and apply it to a different set of tweets: (public) tweets mentioning ‘mluc11’. I will apply similar analysis to discover the highlights of the conference, and its most interesting contributors on Twitter.

The basic idea is quite simple:

- Gather data

- Convert to some convenient format

- Make it searchable

- Apply some statistics and do calculations

- Draw graphs and conclusions

Gathering data

Twitter has quite an elaborate API, but the most useful part for this purpose –the search API– falls short. To analyze tweets you need to be able to look back over at least the past month, most likely even longer. Twitter search however only returns tweets from the past few days, limiting its usefulness quite a lot.

Twitter search does come with an RSS feed option though. That is what I used to collect little less than 600 tweets mentioning ‘mluc11’ by anyone with a public timeline. Add ‘rpp=100’ as parameter to get the max items returned per call:

I added this RSS feed a few months ago to the RSS agent I had closest at hand: Microsoft Outlook. Not my personal favorite, but I have to use it anyway (company policy).

Convert to some convenient format

From here I had two options:

- Extract the URLs and connect with Twitter API to retrieve the original tweet in XML

- Just use the RSS information, and write that as XML

Retrieving the original tweets as XML has the benefit that you can optionally include markers for mentions, urls, hashtags, and get additional details about them included in the same call as well. You need to go through a tricky OAuth process to get access to the API, however.

For the purpose of the analysis, all necessary information is already available in the RSS information, which I already had ready at hand. So, I decided to skip the hassle of accessing the API and use a bit of VBA code to write my collected RSS feed messages to XML:

Sub writeTweets()

Dim item As PostItem

Dim tweets As String

Dim url As String

Dim stamp As String

tweets = "<?xml version=""1.0"" encoding=""windows-1252""?>" & vbCrLf

tweets = tweets & "<tweets>" & vbCrLf

For Each item In ActiveExplorer.CurrentFolder.Items

url = Replace(Right(item.Body, Len(item.Body) - InStrRev(item.Body, "HYPERLINK") - 10), """Artikel weergeven...", "")

tweets = tweets & "<tweet url=""" & url & """><from>" & item.SenderName & "</from><subject>" & Replace(Replace(item.Subject, "&", "&"), "<", "<") & "</subject><stamp>" & item.ReceivedTime & "</stamp></tweet>" & vbCrLf

Next item

tweets = tweets & "</tweets>" & vbCrLf

Call WriteToFile("c:\tmp\tweets.xml", tweets)

End Sub

Sub WriteToFile(path As String, text As String)

Dim fnum As Long

fnum = FreeFile()

Open path For Output As fnum

Print #fnum, text

Close #fnum

End Sub

Make sure the mail folder containing the RSS messages is your current folder. Hit Alt + F11 in Microsoft Outlook to open the macro editor, paste the macro’s in en use F5 to run the writeTweets macro. The macro results in something like this:

<tweets>

<tweet url="http://twitter.com/jpcs/statuses/58818516685553665">

<from>jpcs (John Snelson)</from>

<subject>RT @StephenBuxton: Spent last 2 weeks reviewing and rehearsing talks for #MLUC11 (San Francisco April 26-29). Great content - it's going to be a great show!</subject>

<stamp>15-4-2011 11:06:41</stamp>

</tweet>

<tweet url="http://twitter.com/jpcs/statuses/58818417511243776">

<from>jpcs (John Snelson)</from>

<subject>RT @SCUEngineering: Interested in non-relational database technologies? "MarkLogic InsideHack 2011" (4/28 - FREE, SF) http://mluc11-insidehack.eventbrite.com/</subject>

<stamp>15-4-2011 11:06:18</stamp>

</tweet>

…

A link to the full copy of tweets as XML can be found at the end of this article.

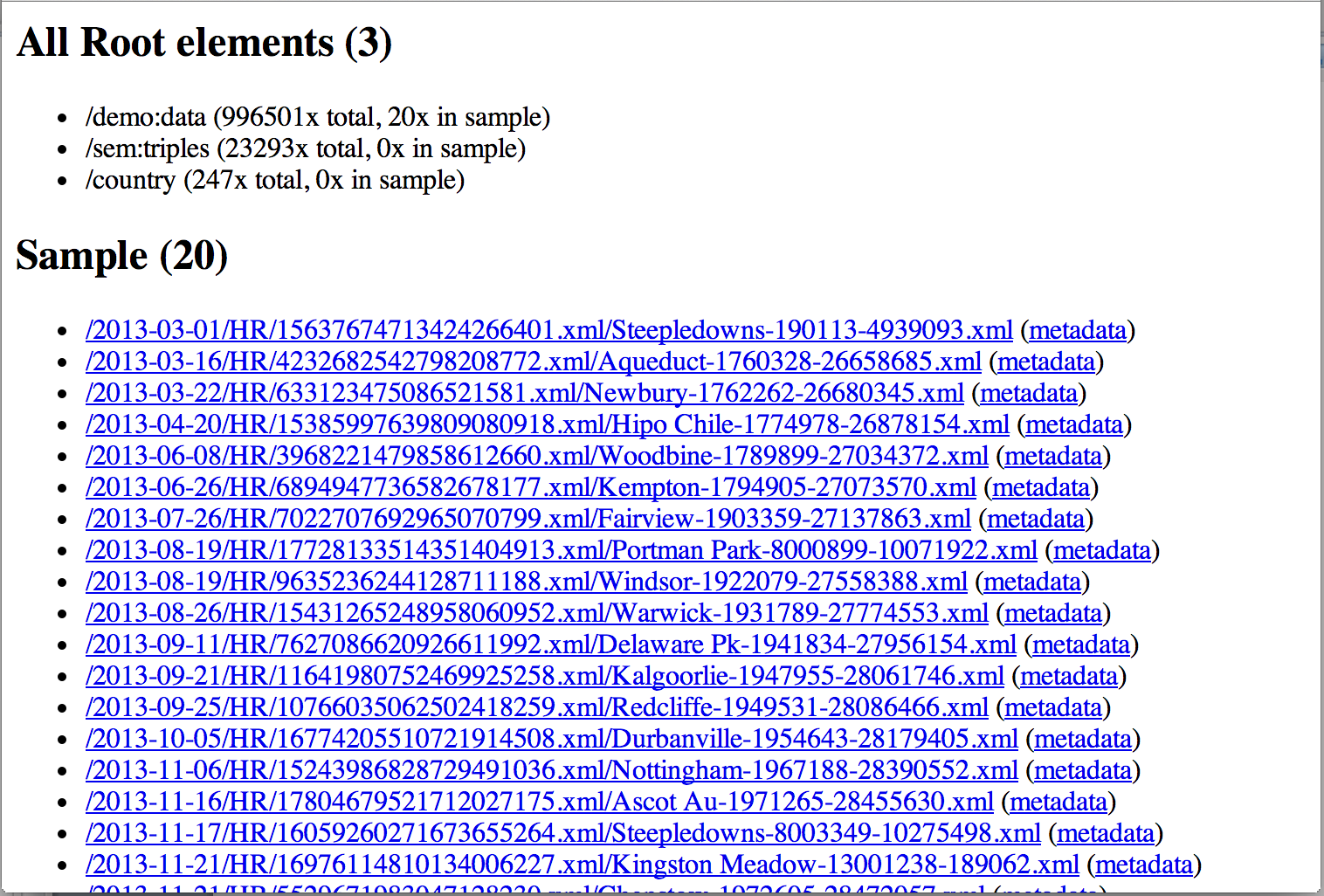

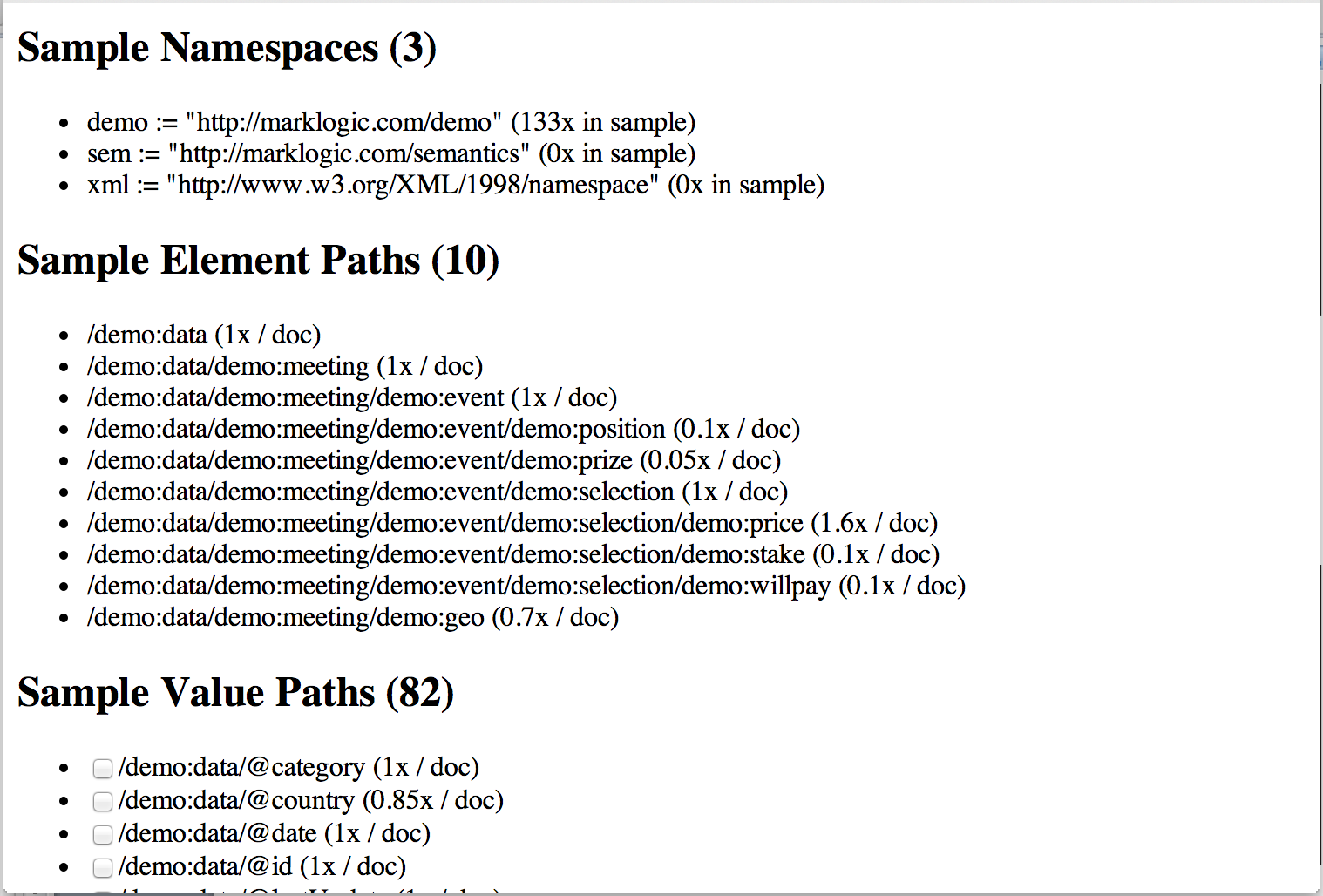

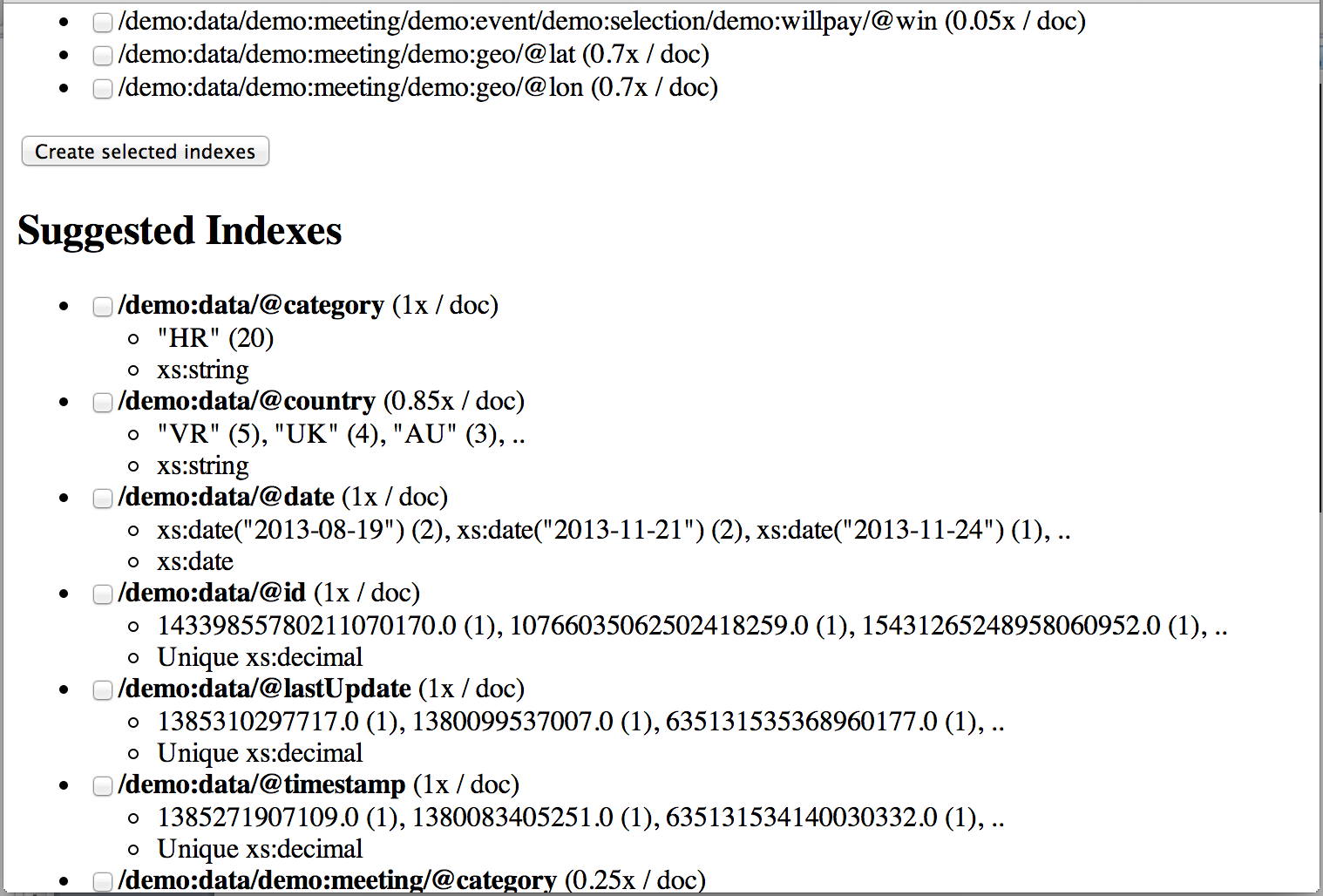

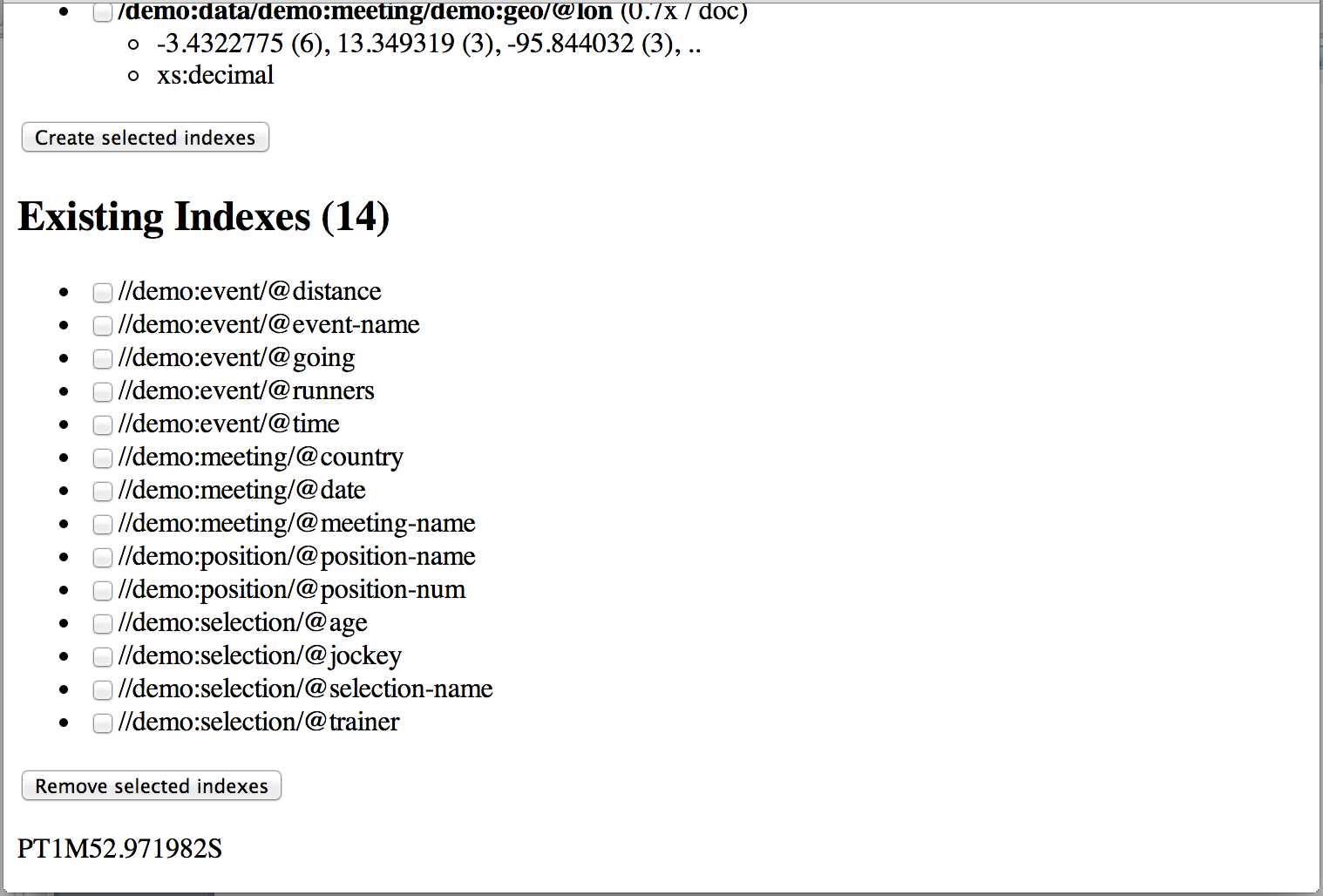

Make it searchable

Since I chose to write the RSS information to XML, I am lacking markers for urls, hashtags, and mentions within the tweet text. Moreover, it is worthwhile to apply some additional enrichments to get more out of the analysis. Also, when you execute above code you will notice that the stamp format coming from VBA is not according to the xs:dateTime format, which is inconvenient. We will fix all of this first. It shouldn’t take much code. Note: The following part shows the most important parts of the code only. A link to the full code can be found at the end of this article.

Let’s start with the sender, assume $t holds an individual tweet. The from element ($t/from) contains both the user id and the user full name, as can be seen from the earlier XML sample. The user id comes first, the full name between braces. You can separate that with a bit of regular expression:

let $user-id := lower-case(replace($t/from, '^([^ \(]+) \(([^\)]+)\)', '$1'))

let $user-name := replace($t/from, '^([^ \(]+) \(([^\)]+)\)', '$2')

The timestamp needs reformatting. The stamp follows Dutch localization in which day comes first, month second, year third. The string also needs a T between date and time, and a Z at the end to pretend we care about time zones. It also needs some extra leading zeros. I used the following regular expressions to fix that:

let $stamp := replace($t/stamp, '^(\d)-(\d+)-', '0$1-$2-')

let $stamp := replace($stamp, '^(\d+)-(\d)-', '$1-0$2-')

let $stamp := replace($stamp, ' (\d):', ' 0$1:')

let $stamp := replace($stamp, '^(\d+)-(\d+)-(\d+) (\d+:\d+:\d+)$', '$3-$2-$1T$4Z')

Identifying mentions within the subject takes a bit more effort, but is still relatively easy with the analyze-string function. Matches are wrapped in a user element, non-matches are passed through:

let $subject :=

for $x in fn:analyze-string($t/subject, '@[a-zA-Z0-9_]+')/*

let $id := lower-case(substring-after($x/text(), '@'))

return

if ($x/self::*:match) then

<user id="{$id}">{$x/text()}</user>

else

$x/text()

The same method is used for hashtags and urls, but with slightly different regular expressions of course. Check out the full code listing to see how I fixed those.

I need to mention one more bit about the urls, though. Urls are usually shortened to save characters. Twitter records the real url as well, but since we rely on the RSS data, we lack that information. I used the MarkLogic Server function xdmp:http-get() to resolve the shortened urls to real urls – Other parsers likely provide alternatives. It essentially comes down to this line, which resorts to the shortened url in case the HTTP GET fails:

let $url := (try { xdmp:http-get($short-url)//*:location/text() } catch ($ignore) { () }, $short-url)[1]

If you look at the full listing, you will notice that I added more. I implemented a primitive caching mechanism to ensures the code doesn’t resolve the same url more than once. I also preloaded the cache to save you from most of the internet access which is slow, and because shortened urls tend to fail after some time.

We are almost there. It can be very interesting to make a distinction between tweets, retweets, and replies. I search for the use of ‘RT’ and ‘@’ to do so:

let $is-retweet := matches($t/subject, '^RT ')

let $is-commented-retweet := matches($t/subject, ' RT ')

let $is-reply := matches($t/subject, '^@')

I make one extra distinction: I noticed that different people can tweet identical messages. I suspect tweet-buttons on internet sites are the cause for that. I count the first as a real tweet, all subsequent ones as kind of retweets, by marking them as duplicates:

let $is-duplicate := exists($tweets/tweet[$t >> .][subject eq $t/subject])

The above expression checks whether the current tweet $t is being preceded by any other tweet ($tweets/tweet[$t >> .]) with identical subject value ($subject eq $t/subject).

The result you get after these enhancements should look more or less like this:

<tweets>

<retweet url="http://twitter.com/jpcs/statuses/58818516685553665">

<from id=”jpcs” name=”John Snelson”>jpcs (John Snelson)</from>

<subject>RT <user id=”stephenbuxton”>@StephenBuxton</user>: Spent last 2 weeks reviewing and rehearsing talks for <tag id=”mluc11”>#MLUC11</tag> (San Francisco April 26-29). Great content - it's going to be a great show!</subject>

<stamp>2011-04-15T11:06:41Z</stamp>

</retweet>

<retweet url="http://twitter.com/jpcs/statuses/58818417511243776">

<from>jpcs (John Snelson)</from>

<subject>RT <user id=”scuengineering”>@SCUEngineering</user>: Interested in non-relational database technologies? "MarkLogic InsideHack 2011" (4/28 - FREE, SF) <url href=”http://mluc11-insidehack.eventbrite.com/”>http://mluc11-insidehack.eventbrite.com/</url></subject>

<stamp>2011-04-15T11:06:18Z</stamp>

</retweet>

…

Apply some statistics and do calculations

First, ask yourself what you would like to know about the tweets. Personally, I am interested in two things about the MLUC11 conference:

- What were the highlights? (according to its tweets)

- Who is telling the most interesting things about it? (and is worth most of following)

Second, these questions needs to be translated to something measurable. For instance: length, volume, start, end, and climax of the trend as a whole. Also: the initiator, top contributors, tweeters that are influential (have large networks), tags and urls that were mentioned, and which of them the most. You could even look at geographical aspects of the trend, provided sufficient information about geographical locations is available. Most comes down to simply counting, and ordering by count. That really is pretty much it. The ‘RSS’ data is raw, but the enriched data makes the analysis rather easy.

Let’s start with a straight-forward question: who contributed the most? It is not about counting Twitter activity alone, but counting original tweets in particular. This is where the distinction between tweets and retweets gets into play. I classified each tweet in one of five categories before, but will compress that into two again for my analysis:

- Tweets: tweets, commented retweets, and replies

- Retweets: uncommented retweets, and duplicates

You could argue about those duplicates, but they are not very original anyhow. Let’s not start on that. ;-)

So, to find out who contributed most, we need to take the full list of unique tweeters, loop over them while counting tweets (and optionally retweets) sent by them, order them by tweet count, and take top n:

let $users := distinct-values($tweets/*/(from/@id | subject/user/@id))

let $users-facet := (

for $user in $users

let $user-tweets := $tweets/*[not(self::retweet or self::duplicate)][from/@id = $user]

let $user-retweets := $tweets/*[self::retweet or self::duplicate][from/@id = $user]

let $tweet-count := count($user-tweets)

let $retweet-count := count($user-retweets)

let $count := $tweet-count + $retweet-count

order by $tweet-count descending, $retweet-count descending, $user

return

<user id="{$user}" count="{$count}" tweets="{$tweet-count}" retweets="{$retweet-count}">@{$user}</user>

)

let $top5-users := $users-facet[1 to 5]

This will give the answer to who contributed most. I call it a facet, since it requires similar calculations as needed for faceted searching. Note: MarkLogic Server has built-in functionality to retrieve such facet information from its indexes, which I choose not to use to keep this article (mostly) engine-independent. It also made it easier for me to fiddle around a bit first. Results will be discussed in next section.

Next question: who was most influential? To do this properly, it would be best to analyze the followers-network of each user to include that into a calculation about the exposure of all of someone’s tweets. But that would involve the Twitter API again. Next to this, a larger size of the network doesn’t guarantee that a larger number of people actually reads the tweets. I therefor prefer to analyze how many people found someone’s tweets interesting. That can be measured quite easily by counting the number of retweets of that person’s tweets. You could also look at the number of times someone is being mentioned, which includes not only retweets, but also replies or other kinds of mentions. The code to calculate the top mentions is following the same pattern as for the users facet, hardly worth mentioning.

We continue with topics and sites: which were most popular (and therefor interesting)? The approach in roughly the same. There is one additional catch though. Some people tend to rave about particular things. Instead of just counting tweets and retweets, I count unique senders of both as well, and use that as first order-by criterion:

let $urls := distinct-values($tweets/*/subject/url/@full)

let $urls-facet := (

for $url in $urls

let $url-tweets := $tweets/*[not(self::retweet or self::duplicate)][subject/url/@full = $url]

let $url-retweets := $tweets/*[self::retweet or self::duplicate][subject/url/@full = $url]

let $tweet-sender-count := count(distinct-values($url-tweets/from))

let $retweet-sender-count := count(distinct-values($url-retweets/from))

let $sender-count := $tweet-sender-count + $retweet-sender-count

let $tweet-count := count($url-tweets)

let $retweet-count := count($url-retweets)

let $count := $tweet-count + $retweet-count

order by $sender-count descending, $tweet-count descending, $retweet-count descending, $url

return

<url full="{$url}" long="{$url}" org="{$url}" count="{$count}" tweets="{$tweet-count}" retweets="{$retweet-count}" senders="{$sender-count}" tweet-senders="{$tweet-sender-count}" retweet-senders="{$retweet-sender-count}">{$url}</url>

)

let $top5-urls := $urls-facet[1 to 5]

The hashtag approach is essentially identical to the urls approach, I’ll skip that. So, this should be enough to answer the question which tags and urls were the most popular.

Last question: who brought the most interesting urls and hashtags forward? Finding the answer to this requires combining facets. It requires taking the top n from both and counting the occurrence of the tweeters. I could also just have counted how many urls and tags someone tweeted, but this highlights the persons that tweeted urls and tags that were found most interesting by the others. The code is not much different from the rest. Look at the full code list to see the details. I excluded the MLUC11 hashtag, since practically all tweets contain it.

Draw graphs and conclusions

Now finally, what *are* those highlights of MLUC11, and who *are* the most interesting contributors?

Well, these were the highlights, according to my analysis:

The top 5 urls:

- http://mluc11-insidehack.eventbrite.com/

(from unique senders: 13, tweets: 7, retweets: 11)

- http://blogs.marklogic.com/2011/04/15/followanyday-mluc11-developer-lounge-labs/

(from unique senders: 11, tweets: 4, retweets: 7)

- http://developer.marklogic.com/events/mluc11-labs-and-lounge-schedule#talks

(from unique senders: 9, tweets: 1, retweets: 8)

- http://developer.marklogic.com/media/mluc11-talks/XSLT-basedWebsitesOnMarkLogic.pdf

(from unique senders: 8, tweets: 1, retweets: 7)

- http://newsletter.marklogic.com/2011/04/live-from-mluc11/

(from unique senders: 5, tweets: 3, retweets: 2)

The first url refers to a sub event of the MLUC11 conference. It is targeted for developers who want to get acquainted with MarkLogic Server, or would like to ask tricky questions to some of the experts.

The second url is a blog post by Pete Aven where he looks forward to the MLUC11 conference, mentioning a few highlights, and giving a brief description of many ‘followanyday’ ML experts and enthusiasts.

The third url points to the MLUC11 schedule.

The fourth url is one particular but pretty awesome presentation about using XSLT within MarkLogic Server to rapidly develop (dynamic) websites.

The fifth is an official news page from Mark Logic announcing the start of the MLUC11 conference. It contains references to Twitter hashtags and Facebook. To the right there is also an interesting list of ‘related posts’. ;-)

The top 5 hashtags:

- #marklogic (from unique senders: 26, tweets: 22, retweets: 16)

- #mluc+mluc12 (from unique senders: 7, tweets: 1, retweets: 6)

- #followanyday (from unique senders: 6, tweets: 1, retweets: 5)

- #tech (from unique senders: 5, tweets: 5, retweets: 6)

- #mluc11burrito (from unique senders: 4, tweets: 3, retweets: 1)

The mentioning of hashtags like #marklogic, #mluc and #mluc12 is not very surprising. The #followanyday hashtag is used to attract attention. It was used together with the followanyday blog post url. The #tech hashtag is used by non-tech people (I guess), in an attempt to push Mark Logic into a particular category. The #mluc11burrito hashtag was used to bring mluc11 visitors together to join in a burrito diner.

The top 5 tweeters:

- @mdubinko (sent tweets: 71, retweets: 1)

- @peteaven (sent tweets: 48, retweets: 8)

- @lisabos (sent tweets: 34, retweets: 1)

- @mattlyles (sent tweets: 14, retweets: 1)

- @ronhitchens (sent tweets: 13, retweets: 1)

The top 5 mentions:

- @mdubinko (in tweets: 6, retweets: 38)

- @marklogic (in tweets: 15, retweets: 20)

- @peteaven (in tweets: 7, retweets: 28)

- @hunterhacker (in tweets: 11, retweets: 5)

- @lisabos (in tweets: 2, retweets: 9)

The top tweeters and mentions are for the most part no surprise. I already predicted in one of my tweets that Micah Dubinko had been retweeted the most. Just keep in mind he tweeted the most about MLUC11, by far. Pete Aven and Lisa Bos tweeted a lot too, so no surprise to see them in the mentions top 5 as well. Whoever is behind the Marklogic account, he did well, and got mentioned second best with ‘just’ 12 tweets and 10 retweets. I tweeted more than mattlyles and ronhichens, but most of them were retweets (by far), while these two made quite a number of original statements from themselves. That is why they rank higher than I. Last but not least: Jason Hunter, aka hunterhacker, one of the experts that also spoke at Mark Logic, got mentioned quite a lot. But that is what you hope to achieve when you are a speaker, right? I'd say he deserves to be in the top 5!

Last but not least, the top 5 most prominent contributors (based on top 5 urls and hashtags):

- @marklogic (tweets: 7, retweets: 3)

- @dscape (tweets: 5, retweets: 6)

- @eedeebee (tweets: 4, retweets: 2)

- @peteaven (tweets: 3, retweets: 4)

- @contentnation (tweets: 3, retweets: 2)

The MarkLogic account scores high obviously, as should. Nuno Job (aka @dscape), Eric Bloch (aka @eedeebee), and Pete Aven are doing well as Mark Logic experts. John Blossom (aka Content Nation) was very fond of the #tech hashtag. Not sure, but he could have been the sole contributor to that hashtag.

To be honest, this wasn't my most brilliant analysis. Changing the sort order can make quite a difference. Also, inclusing a larger top n of urls and tags has large influence on the contributors top 5 as well. But making a brilliant analysis wasn’t really my point, I just hope you enjoy the bits of code I shared..

Don't forget to download the full code listing. It adds some interactivity as well!

https://raw.github.com/grtjn/utilities/master/analyze-tweets.xqy

“XQuery is replacing proprietary middleware languages and Web Application development languages. XQuery is replacing complex Java or C++ programs with a few lines of code…”

“XQuery is replacing proprietary middleware languages and Web Application development languages. XQuery is replacing complex Java or C++ programs with a few lines of code…”  Geert Josten joined in July 2000 as an IT consultant at Daidalos. His interest is wide, but he is most active as a content engineer with an emphasis on XML and related standards. He followed the XML standards from the very beginning and actively contributes to the XML community. Geert is also active as Web and Java developer. Read more articles by him on his

Geert Josten joined in July 2000 as an IT consultant at Daidalos. His interest is wide, but he is most active as a content engineer with an emphasis on XML and related standards. He followed the XML standards from the very beginning and actively contributes to the XML community. Geert is also active as Web and Java developer. Read more articles by him on his